How does AI create music

Automatic music generation dates back to the 1950s, but only recently has AI-generated music become advanced enough to suggest that it could become indistinguishable from human creations in the near future.

Several approaches can be used for music generation, such as Markov chains, grammar-based and rule-based models, and deep learning. Deep learning currently achieves the best results in the field and is the subject of extensive research by companies such as Google, with its Magenta project, and OpenAI, with MuseNet and Jukebox, among many others.

Ways to tackle this challenge vary significantly, depending on how researchers frame the problem and on the expected outcome. What is supposed to be created? A simple monophonic melody? A classical piano piece? A catchy pop song? A counterpoint accompaniment for a provided melody? Or maybe a human-like vocal?

Let’s go through several approaches to modern AI music generation.

Data representation

The first thing to consider is how to represent music in a form that can be understood by a machine. There are two common approaches to representing music for machine learning:

Audio representation – music is represented as an audio signal, which can be either a raw waveform or a transformed one (most frequently a spectrogram). Audio representation requires significantly more resources than symbolic representation, but it preserves much more detailed information about the music, such as the sound of an instrument or of a human voice. With the rising computational power of modern computers, this approach is gaining popularity.

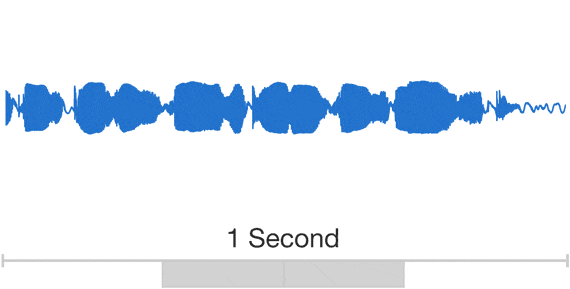

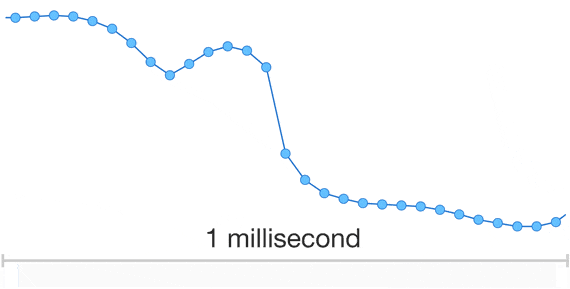

Raw audio representation (waveform) means representing sound as sound pressure variation in the time domain. To digitize the sound, tens of thousands of samples are taken every second. The waveform is the most accurate, but also the most resource-intensive, representation of sound.
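To make the sampling idea concrete, here is a minimal sketch that builds one second of a pure tone as a raw waveform. The sample rate of 44,100 Hz (CD quality) and the function name `sine_wave` are my assumptions for illustration, not something any of the described models prescribe.

```python
import numpy as np

SAMPLE_RATE = 44_100  # samples per second (assumed; CD quality)

def sine_wave(freq_hz: float, seconds: float, sample_rate: int = SAMPLE_RATE) -> np.ndarray:
    """Return a mono waveform: one amplitude value per sample."""
    n_samples = int(round(seconds * sample_rate))
    t = np.arange(n_samples) / sample_rate          # time of each sample, in seconds
    return np.sin(2.0 * np.pi * freq_hz * t).astype(np.float32)

wave = sine_wave(440.0, 1.0)   # one second of the note A4
print(wave.shape)              # (44100,)
```

Even this trivial one-second sound is a vector of 44,100 numbers, which is why waveform models are so demanding.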

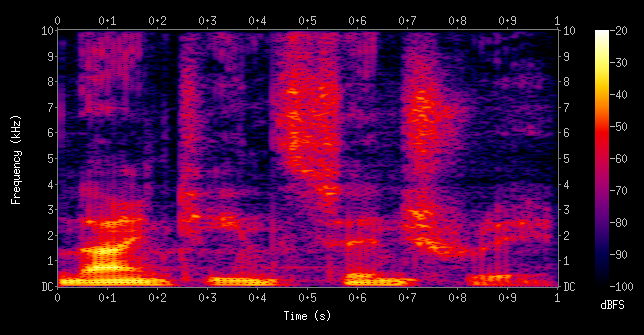

A spectrogram is a representation of how the frequency spectrum changes over time. It is generated by applying the Fourier transform to short, successive segments of a waveform in order to extract sound intensity at various frequencies. Machine learning researchers often use mel-spectrograms, which use the mel scale in place of hertz. The mel scale is a roughly logarithmic mapping of frequency created to better represent human perception of pitch, since perceived pitch changes approximately logarithmically with frequency. The advantage of spectrograms over waveforms is that they have far fewer timesteps, although each timestep contains more information. Spectrograms were widely used because of their compatibility with image processing models, but newer solutions seem to prefer waveforms.
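A rough sketch of both ideas, using only NumPy: a magnitude spectrogram computed with a short-time Fourier transform, and one common Hz-to-mel formula. The frame length, hop size, 16 kHz sample rate and the particular mel formula are assumptions chosen for the example; audio libraries offer several variants.

```python
import numpy as np

def spectrogram(wave, frame_len=1024, hop=512):
    """Magnitude spectrogram: Fourier transform of short, overlapping frames."""
    window = np.hanning(frame_len)
    n_frames = 1 + (len(wave) - frame_len) // hop
    frames = np.stack([wave[i * hop : i * hop + frame_len] * window
                       for i in range(n_frames)])
    return np.abs(np.fft.rfft(frames, axis=1))   # shape: (n_frames, frame_len // 2 + 1)

def hz_to_mel(freq_hz):
    """One widely used Hz-to-mel mapping (roughly logarithmic at higher frequencies)."""
    return 2595.0 * np.log10(1.0 + np.asarray(freq_hz, dtype=float) / 700.0)

sr = 16_000                                  # assumed sample rate
t = np.arange(sr) / sr
wave = np.sin(2.0 * np.pi * 1000.0 * t)      # one second of a 1 kHz tone
spec = spectrogram(wave)
peak_bin = int(spec.mean(axis=0).argmax())   # strongest frequency bin
peak_hz = peak_bin * sr / 1024
print(spec.shape, peak_hz)                   # (30, 513) 1000.0
```

One second of audio shrinks from 16,000 timesteps to 30, but each timestep now holds 513 values.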

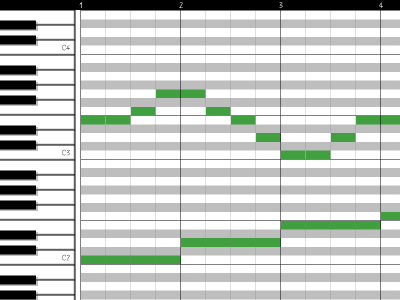

Symbolic representation – the piece of music is treated as a set of events, such as notes, rests and chords, which occur sequentially or simultaneously. Each event has several properties, such as instrument, velocity, pitch and length. The data can be stored as MIDI, a piano roll, a text format, and many others. Throughout the history of AI music generation, symbolic representation was significantly more popular than audio representation because it requires fewer resources to process. On the other hand, it comes with limitations, because many nuances of a musical performance are lost. Unlike in audio representation, what the model generates is not the sound itself but something closer to sheet music: an instruction for what should be played. It’s worth mentioning that this approach does not allow us to generate a human voice.
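As an illustration of the symbolic approach, the sketch below stores a short melody as a list of note events and converts it to a piano roll (a pitch-by-time matrix). The `Note` class, its fields and the sixteenth-note time grid are hypothetical choices for this example, not a standard format.

```python
import numpy as np
from dataclasses import dataclass

@dataclass
class Note:
    pitch: int      # MIDI pitch number, 60 = middle C
    start: int      # onset, in time steps (here: sixteenth notes)
    length: int     # duration, in time steps
    velocity: int   # loudness, 0-127 as in MIDI

def to_piano_roll(notes, n_steps, n_pitches=128):
    """Rows are pitches, columns are time steps, values are velocities."""
    roll = np.zeros((n_pitches, n_steps), dtype=np.int16)
    for note in notes:
        roll[note.pitch, note.start : note.start + note.length] = note.velocity
    return roll

melody = [Note(60, 0, 4, 90), Note(64, 4, 4, 80), Note(67, 8, 8, 100)]  # C, E, G
roll = to_piano_roll(melody, n_steps=16)
print(roll.shape)   # (128, 16)
```

The whole phrase fits in three small records, compared with tens of thousands of samples per second in the audio domain.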

Deep learning models

Once musical data can be understood by a computer, the next step is to create the machine learning model. I assume that you are familiar with the elementary concepts of machine learning, so I will not elaborate on the basics of neural networks. The basic idea of a deep learning generative model for music is simple: a neural network, a few possible architectures of which are described later, is used in two consecutive phases, training and generation. The goal of the training phase is to adjust the weights of the neural network using a training dataset. In the generation phase, new data is produced, which can, but does not have to, be conditioned on some input, for example a sequence of notes that the generated melody should begin with, a melody for which the model should create an accompaniment, or even lyrics.
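The two phases can be shown with a deliberately tiny stand-in. Note that the sketch below is not a neural network: it uses a first-order Markov chain (one of the classical approaches mentioned earlier) purely to show what "train, then generate from a primer" looks like. The function names and the toy corpus of MIDI pitches are made up for the example.

```python
import random
from collections import Counter, defaultdict

def train(sequences):
    """Training phase: count which pitch follows which in the dataset."""
    counts = defaultdict(Counter)
    for seq in sequences:
        for prev, nxt in zip(seq, seq[1:]):
            counts[prev][nxt] += 1
    return counts

def generate(model, primer, n_new, rng):
    """Generation phase: continue the primer one event at a time."""
    out = list(primer)
    for _ in range(n_new):
        options = model.get(out[-1])
        if not options:            # context never seen in training: stop early
            break
        pitches, weights = zip(*options.items())
        out.append(rng.choices(pitches, weights=weights, k=1)[0])
    return out

corpus = [[60, 62, 64, 65, 67, 65, 64, 62, 60], [60, 64, 67, 64, 60]]
model = train(corpus)
piece = generate(model, primer=[60, 62], n_new=8, rng=random.Random(0))
print(len(piece))   # 10
```

A deep learning model replaces the counting table with a trained network, but the overall loop (learn from data, then extend a given beginning step by step) stays the same.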

Long Short-Term Memory neural networks

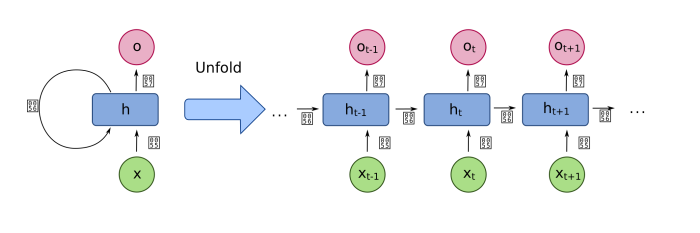

To understand what an LSTM is, we should start with recurrent neural networks (RNNs).

An RNN is a neural network used for processing sequential data, which makes it a good candidate for a music generation model. It applies the same function to each element of the sequence and uses the results of previous computations when processing later elements.
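A minimal sketch of that recurrence in NumPy, with random (untrained) weights and made-up sizes, just to show that the same function is applied at every step and that the hidden state carries information forward:

```python
import numpy as np

rng = np.random.default_rng(0)
n_in, n_hidden = 4, 8                       # arbitrary sizes for the example
W_x = rng.normal(scale=0.1, size=(n_hidden, n_in))
W_h = rng.normal(scale=0.1, size=(n_hidden, n_hidden))
b = np.zeros(n_hidden)

def rnn_step(x_t, h_prev):
    """The same function (same weights) is applied to every sequence element."""
    return np.tanh(W_x @ x_t + W_h @ h_prev + b)

sequence = rng.normal(size=(16, n_in))      # 16 events, each a 4-dimensional vector
h = np.zeros(n_hidden)                      # initial hidden state
for x_t in sequence:
    h = rnn_step(x_t, h)                    # h summarizes everything seen so far

print(h.shape)   # (8,)
```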

There were attempts to create music using plain RNNs, but the generated pieces turned out to lack coherence and overall structure. This is a result of the vanishing and exploding gradient phenomena, which make it hard for the network to learn dependencies spanning more than a few steps, so it effectively ‘forgets’ what was created earlier.

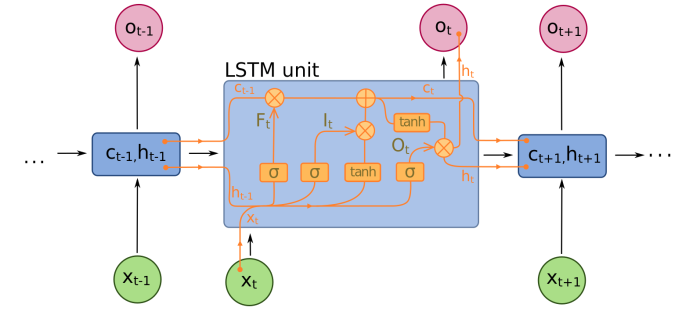

That’s where LSTMs come into play. The LSTM is a type of recurrent neural network that addresses the lack of long-term consistency in generated pieces of music by using memory cells, which can retain information for a longer period of time, and gates, which control the flow of information. These modifications let LSTMs learn long-term dependencies much better than regular RNNs.
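For readers who prefer code to diagrams, here is a sketch of a single step of the standard LSTM cell in NumPy. The weights are random and the gate ordering inside `W` is an arbitrary choice of mine; deep learning libraries lay this out in their own ways.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x_t, h_prev, c_prev, W, b):
    """One LSTM step. W has shape (4 * n_hidden, n_in + n_hidden)."""
    n = h_prev.shape[0]
    z = W @ np.concatenate([x_t, h_prev]) + b
    f = sigmoid(z[0 * n : 1 * n])     # forget gate: what to erase from the memory cell
    i = sigmoid(z[1 * n : 2 * n])     # input gate: what new information to store
    o = sigmoid(z[2 * n : 3 * n])     # output gate: what part of the memory to expose
    g = np.tanh(z[3 * n : 4 * n])     # candidate values for the memory cell
    c_t = f * c_prev + i * g          # memory cell: kept part plus newly written part
    h_t = o * np.tanh(c_t)            # hidden state passed to the next step
    return h_t, c_t

rng = np.random.default_rng(0)
n_in, n_hidden = 4, 8
W = rng.normal(scale=0.1, size=(4 * n_hidden, n_in + n_hidden))
b = np.zeros(4 * n_hidden)
h, c = np.zeros(n_hidden), np.zeros(n_hidden)
for x_t in rng.normal(size=(16, n_in)):
    h, c = lstm_step(x_t, h, c, W, b)
```

The key line is the update of `c_t`: when the forget gate stays close to 1, the memory cell is carried forward almost unchanged, which is what lets information survive over many steps.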

If you are interested in a more detailed theoretical explanation of LSTM, you can find a detailed article here

LSTMs were widely used for music because of their simplicity compared with more modern approaches, but they were largely superseded by transformers and other advanced models, as LSTMs struggle to create long and well-structured pieces.

One example of an LSTM-based solution is Magenta’s MelodyRNN project.

A detailed article describing how to generate music using LSTM with Keras can be found here.

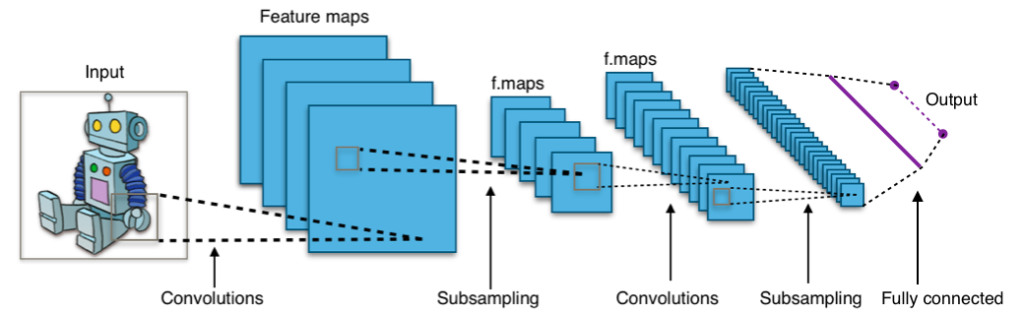

Convolutional Neural Networks (CNNs)

Convolutional neural networks were originally used mostly for image recognition, but they can also be used for music processing. CNNs are based on the convolution operation, which means applying small filters, whose weights are learned during training, to successive parts of the data in order to extract specific patterns.

In music processing, convolution is applied over time, unlike in image processing, where it is applied to regions of an image. A noteworthy example of using a CNN with music is WaveNet.
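A small sketch of convolution over time. The two-tap filter here is hand-picked so that the result is easy to read; in an actual CNN the filter values would be learned from data.

```python
import numpy as np

def conv1d_valid(signal, kernel):
    """Slide the kernel along the time axis (cross-correlation, as in most DL libraries)."""
    k = len(kernel)
    return np.array([np.dot(signal[i : i + k], kernel)
                     for i in range(len(signal) - k + 1)])

signal = np.array([0.0, 0.0, 1.0, 1.0, 1.0, 0.0, 0.0])   # a note switched on, then off
edge_filter = np.array([-1.0, 1.0])                       # responds to changes in level
response = conv1d_valid(signal, edge_filter)
print(response)   # [ 0.  1.  0.  0. -1.  0.]
```

The filter fires with +1 where the note starts and with -1 where it ends, which is the kind of local pattern a convolutional layer extracts.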

WaveNet (DeepMind)

WaveNet’s primary purpose is to generate human-like speech, but it was also trained for music generation on a dataset of piano recordings.

WaveNet feeds a raw audio waveform into a convolutional neural network that uses different convolution dilation factors in different layers. Growing dilation factors allow the network to take thousands of timesteps into account as a basis for predicting the next value. After prediction, the generated value becomes part of the input data for the next iteration.
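The effect of growing dilation factors can be checked with a few lines of NumPy. The sketch below assumes filters of size 2 and dilations that double in every layer; it is a simplification that leaves out everything else in WaveNet (gated activations, residual connections and so on).

```python
import numpy as np

def causal_dilated_conv(x, w, dilation):
    """y[t] = w[0] * x[t - dilation] + w[1] * x[t]; samples before t = 0 count as 0."""
    past = np.concatenate([np.zeros(dilation), x])[: len(x)]   # x shifted right by `dilation`
    return w[0] * past + w[1] * x

def receptive_field(dilations, kernel_size=2):
    """How many input timesteps can influence one output value."""
    return 1 + sum((kernel_size - 1) * d for d in dilations)

dilations = [1, 2, 4, 8, 16, 32, 64, 128, 256, 512]
print(receptive_field(dilations))   # 1024

# Empirical check: push a single impulse through the stack and count the outputs it reaches.
x = np.zeros(2048)
x[0] = 1.0
y = x
for d in dilations:
    y = causal_dilated_conv(y, np.array([1.0, 1.0]), d)
print(int(np.count_nonzero(y)))     # 1024
```

Ten layers with two weights each already cover 1,024 timesteps; without dilation the same ten layers would cover only 11.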

This model generates the audio signal timestep by timestep, and the typical sample rate of audio is on the order of thousands or tens of thousands of samples per second. This makes the generation process quite slow, as an enormous number of values must be predicted by the network to create just a second of music.
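The cost is easy to see in a sketch of the sampling loop. `predict_next` below is a hypothetical stand-in for a trained network (it just returns a damped average), and the 16 kHz sample rate and context length are assumptions; the point is only that every single output sample requires one call to the model.

```python
import numpy as np

SAMPLE_RATE = 16_000   # assumed sample rate, in samples per second

def predict_next(context):
    """Hypothetical stand-in for a trained network: a damped average of the context."""
    return 0.9 * float(np.mean(context))

def generate(seed, n_new, context_len=256):
    samples = list(seed)
    calls = 0
    for _ in range(n_new):
        # The newly generated value is appended and becomes input for the next step.
        samples.append(predict_next(samples[-context_len:]))
        calls += 1
    return np.array(samples), calls

audio, calls = generate(seed=[1.0], n_new=SAMPLE_RATE)   # one second of audio
print(calls)   # 16000
```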

Generative Adversarial Networks (GANs)

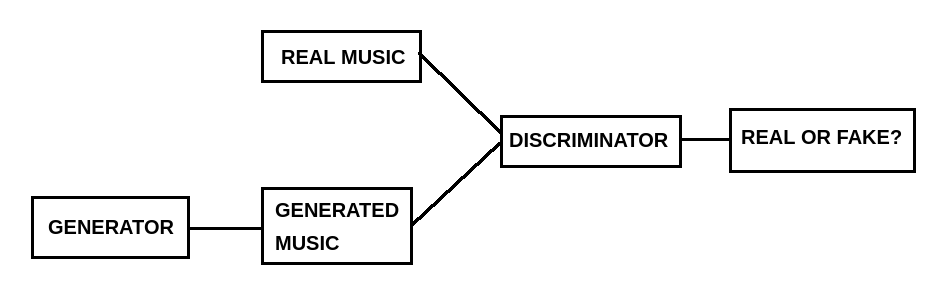

GANs consist of two neural networks: the generator and the discriminator. The generator, as its name suggests, is responsible for generating samples of music, while the discriminator’s responsibility is to decide if its input is a real piece, or a sample generated by the generator.

At the beginning, the discriminator is trained on a dataset of real music, and the generator is fed random input. The generator then creates a sample and passes it to the discriminator, which decides whether it is real or generated. After that, backpropagation is applied to each network with its own objective, so the generator learns to create samples that are closer to the real data, and the discriminator becomes better at recognizing which samples are fake. After training, the generator should be able to create new samples that sound similar to music created by humans.
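To show the mechanics of the two competing updates without any deep learning framework, here is a toy GAN on one-dimensional numbers instead of music. Everything in it is an assumption made for the sake of a short example: the "real data" is drawn from a normal distribution centered at 4, the generator is a linear function, the discriminator is a single logistic unit, and the gradients are written out by hand.

```python
import numpy as np

def sigmoid(s):
    return 1.0 / (1.0 + np.exp(-s))

def train_gan(steps=500, batch=256, lr=0.05, seed=0):
    rng = np.random.default_rng(seed)
    a, b = 1.0, 0.0      # generator:      G(z) = a * z + b
    w, c = 0.0, 0.0      # discriminator:  D(x) = sigmoid(w * x + c)
    for _ in range(steps):
        real = rng.normal(4.0, 1.0, size=batch)   # toy "real data"
        z = rng.normal(size=batch)                # random input for the generator
        fake = a * z + b

        # Discriminator step: push D(real) towards 1 and D(fake) towards 0.
        d_real, d_fake = sigmoid(w * real + c), sigmoid(w * fake + c)
        grad_w = np.mean((d_real - 1.0) * real) + np.mean(d_fake * fake)
        grad_c = np.mean(d_real - 1.0) + np.mean(d_fake)
        w, c = w - lr * grad_w, c - lr * grad_c

        # Generator step: push D(fake) towards 1, i.e. try to fool the discriminator.
        d_fake = sigmoid(w * fake + c)
        grad_a = np.mean((d_fake - 1.0) * w * z)
        grad_b = np.mean((d_fake - 1.0) * w)
        a, b = a - lr * grad_a, b - lr * grad_b
    return {"a": a, "b": b, "w": w, "c": c}

params = train_gan()
print(params["b"])   # the generator's offset, which starts at 0 and is pulled towards the real data
```

Real GANs for music use deep networks and automatic differentiation, and their training is known to be unstable, so treat this only as a picture of who updates what and in which direction.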

An example of a GAN being used to generate multi-track music in the symbolic domain is the MuseGAN project.

Variational autoencoders

Autoencoders are a class of neural networks used to reduce the dimensionality of input data to a specified size in such a way that the data can be reconstructed with as little loss as possible. An autoencoder consists of an encoder, which is responsible for dimensionality reduction, and a decoder, which reconstructs the data.

Variational autoencoders are similar to regular autoencoders, with one difference: instead of learning how to compress the data to a concrete vector, the encoder produces the parameters of a probability distribution (means and variances). In other words, VAEs learn a probability distribution over encoded representations of the training data, which allows them to generate new samples that are similar to the data used for learning. Generation is done by taking a sample from the learned distribution and passing it to the decoder.
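The sampling step can be sketched in a few lines. The code below assumes, as is common practice, that the encoder outputs a mean and a log-variance per latent dimension, and it also shows the usual penalty (KL divergence to a standard normal) that keeps the learned distribution easy to sample from. Encoder and decoder networks are left out entirely.

```python
import numpy as np

def sample_latent(mean, log_var, rng):
    """Draw z ~ N(mean, exp(log_var)) as mean + std * noise (reparameterization trick)."""
    eps = rng.standard_normal(mean.shape)
    return mean + np.exp(0.5 * log_var) * eps

def kl_to_standard_normal(mean, log_var):
    """KL divergence between N(mean, exp(log_var)) and N(0, 1), summed over dimensions."""
    return 0.5 * np.sum(np.exp(log_var) + mean ** 2 - 1.0 - log_var)

rng = np.random.default_rng(0)
mean = np.array([1.0, 1.0])        # pretend these came from the encoder
log_var = np.array([0.0, 0.0])     # log-variance 0 means variance 1
z = sample_latent(mean, log_var, rng)             # this vector would go to the decoder
print(kl_to_standard_normal(mean, log_var))       # 1.0
```

At generation time no encoder is needed at all: a vector is drawn from the standard normal distribution and passed straight to the decoder.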

VAEs are used for example in Magenta’s MusicVAE project.

Jukebox (OpenAI)

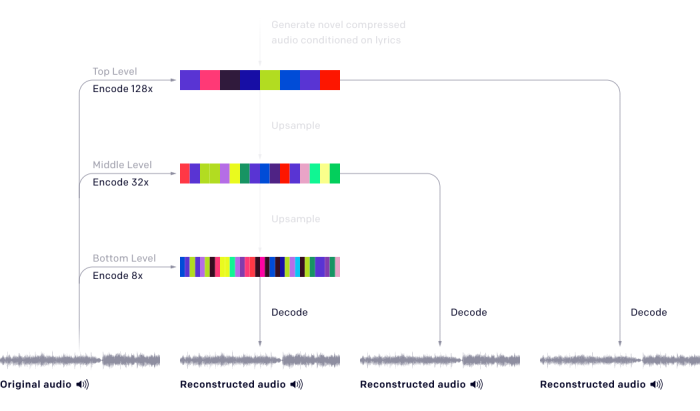

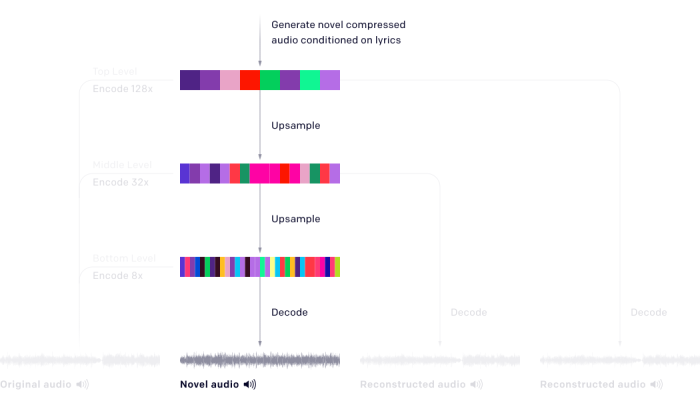

Jukebox generates music in the raw audio domain, using a modification of the variational autoencoder called VQ-VAE, where VQ stands for vector quantization. This mechanism uses a trained codebook, which is a fixed-size set of vectors with the same dimensionality as the encoded data. After encoding, each encoded vector is mapped to the closest vector in the codebook.
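The quantization step itself is a nearest-neighbor lookup, sketched below with a made-up codebook of three two-dimensional vectors (a real codebook is learned and much larger).

```python
import numpy as np

def quantize(encoded, codebook):
    """Map each encoded vector (row) to the index and value of its nearest codebook vector."""
    dists = ((encoded[:, None, :] - codebook[None, :, :]) ** 2).sum(axis=-1)
    codes = dists.argmin(axis=1)
    return codes, codebook[codes]

codebook = np.array([[0.0, 0.0], [1.0, 1.0], [-1.0, 2.0]])     # toy codebook
encoded = np.array([[0.1, -0.2], [0.9, 1.2], [-0.8, 1.7]])     # toy encoder output
codes, quantized = quantize(encoded, codebook)
print(codes)   # [0 1 2]
```

After this step a piece of audio is described by a sequence of integer codes, which is exactly the kind of discrete sequence that language-model-style generators handle well.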

The Jukebox model consists of three independent VQ-VAEs that compress the input by factors of 8, 32 and 128, so that it can capture not only the overall structure but also the details of a song’s performance.
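A quick back-of-the-envelope calculation shows what these factors mean in practice. The 44.1 kHz sample rate is my assumption for the arithmetic; the compression factors are the ones quoted above.

```python
def codes_per_second(sample_rate: int, compression: int) -> float:
    """How many codebook entries describe one second of audio at a given level."""
    return sample_rate / compression

SAMPLE_RATE = 44_100   # assumed sample rate, in samples per second
rates = {factor: codes_per_second(SAMPLE_RATE, factor) for factor in (8, 32, 128)}
for factor, rate in rates.items():
    print(f"{factor:>3}x compression: about {rate:.0f} codes per second")
```

At the most compressed level a second of music is only a few hundred codes long, which makes long-range structure tractable, while the least compressed level keeps enough detail for timbre and performance nuances.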

After the VQ-VAEs are trained, music can be generated, optionally conditioned on artist, genre and lyrics. This is done by generating an encoded top-level sample, passing it through upsamplers, and then passing the result to the decoder. The model uses variants of transformers both for creating the compressed samples and for upsampling.

Transformers

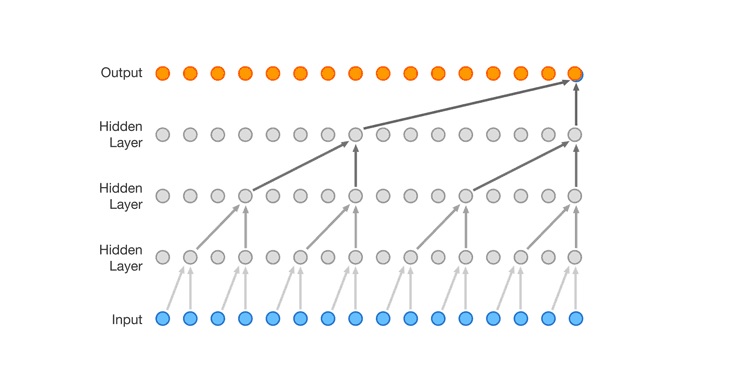

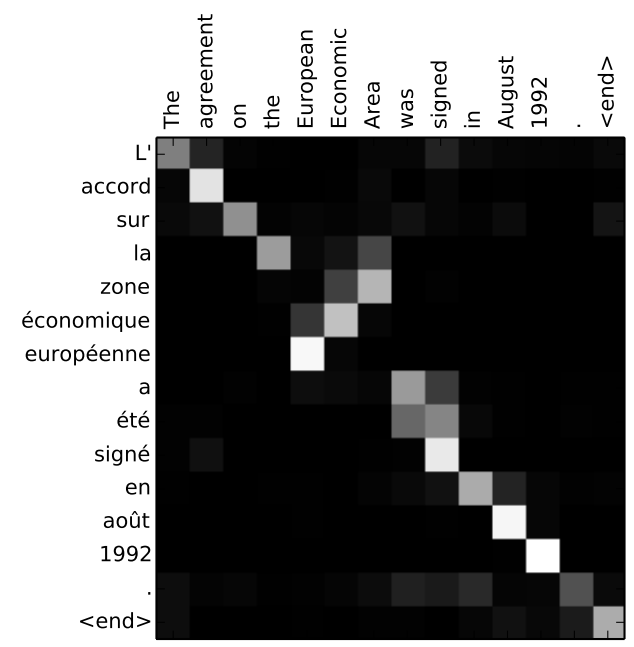

The Transformer is a model based primarily on the attention mechanism, which was first introduced in neural networks designed for language translation to help them make decisions based on context. Attention is a mechanism that connects elements of an output to elements of an input or, as in music generation models, to previously generated musical events. The weights of those connections are learned during the training phase. Attention provides context which, in music generation, allows the created pieces to be much more consistent.

The image above visualizes how the attention mechanism works for English-French translation of a sentence.

The process of music generation with transformers works analogously to text generation: events are treated as words, and musical phrases are created in a similar manner to sentences.
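The attention computation described above can be sketched in NumPy as scaled dot-product self-attention with a causal mask, so that each event can only look at itself and at earlier events. The queries, keys and values are random here; in a trained model they would be learned projections of the event embeddings.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def causal_self_attention(Q, K, V):
    """Each position attends to itself and to earlier positions only."""
    n, d = Q.shape
    scores = Q @ K.T / np.sqrt(d)                        # similarity of every pair of events
    future = np.triu(np.ones((n, n), dtype=bool), k=1)   # True where the key lies in the future
    scores = np.where(future, -np.inf, scores)
    weights = softmax(scores)                            # each row sums to 1
    return weights @ V, weights

rng = np.random.default_rng(0)
n_events, d_model = 6, 16
Q, K, V = (rng.normal(size=(n_events, d_model)) for _ in range(3))
context, weights = causal_self_attention(Q, K, V)
print(weights.round(2))   # lower-triangular matrix of attention weights
```

Row `i` of `weights` says how much event `i` draws on each earlier event, which is the information the attention visualizations in this article display.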

Music Transformer (TensorFlow Magenta by Google AI)

Magenta’s Music Transformer is a variant of the transformer model with relative attention. Relative attention means that the position of each element of a sequence, in this case a musical event (e.g. the event of playing a note), is represented in relation to the other elements of the sequence, unlike in the basic variant of the transformer, where the positions of elements passed to the attention matrices are absolute. This improvement makes it easier to keep track of regularities in the structure of the music the model is processing.
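One way to picture relative attention is as an extra term added to the attention scores that depends only on how far apart two events are. The sketch below is a naive version written for readability: the variable names and the table `E` of distance embeddings are my own, the values are random, and practical implementations compute the same quantity in a much more memory-efficient way.

```python
import numpy as np

def relative_logits(Q, K, E):
    """Attention scores with a relative-position term.

    E[r] is an embedding for "r steps back"; Q, K and E all have shape (n, d).
    """
    n, d = Q.shape
    idx = np.arange(n)
    dist = idx[:, None] - idx[None, :]            # dist[i, j] = i - j
    dist_clipped = np.clip(dist, 0, n - 1)        # future positions are masked below anyway
    S_rel = np.einsum("id,ijd->ij", Q, E[dist_clipped])   # q_i dot E[i - j]
    logits = (Q @ K.T + S_rel) / np.sqrt(d)
    return np.where(dist < 0, -np.inf, logits)    # causal mask

rng = np.random.default_rng(0)
n, d = 8, 4
E = rng.normal(size=(n, d))
# With identical queries and no content term, scores depend only on the distance i - j.
logits = relative_logits(np.ones((n, d)), np.zeros((n, d)), E)
print(np.isclose(logits[3, 1], logits[5, 3]))   # True: both pairs are 2 steps apart
```

Because the same distance gets the same treatment wherever it occurs, a pattern such as "repeat what happened four beats ago" can be learned once and reused throughout a piece.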

The picture above comes from the Music Transformer paper and shows how the currently played note was influenced by previous notes.

The Music Transformer is the core part of another interesting Magenta project, Wave2Midi2Wave. It takes a piano recording as input, transcribes it to MIDI using the Onsets and Frames model, and passes this MIDI as input to the Transformer. The generated music is then converted back to audio with a WaveNet model conditioned on the given MIDI.

MuseNet (OpenAI)

MuseNet is another transformer-based model for music generation in the symbolic domain, working on MIDI files. It is a 72-layer network with 24 attention heads and full attention over a context of 4096 tokens. During training, the network was also given information about genre, instruments and composers, which allows it to mimic the style of a particular artist in a newly generated song, or to blend completely different styles to generate something unique.

Conclusion

I have presented only a few of the most interesting projects in a field so big that it would take a whole book to cover properly. Most of the linked projects provide samples of generated music, so you can check for yourself how advanced the technology currently is. In my personal opinion, artificial intelligence has not yet outdone humans in artistic creativity. Machine-generated pieces, though very interesting, are not as complex, well-structured and pleasant to the ear as products of human creativity. However, the field is developing faster than ever, more and more computational resources are available, and big companies are working on making AI better at composition than humans, so it is probably just a matter of time before the top charts are occupied by AI artists. Will it be sooner or later? We will see.

References

- https://benanne.github.io/2020/03/24/audio-generation.html

- https://magenta.tensorflow.org/

- Cheng-Zhi Anna Huang et al. – Music Transformer (https://arxiv.org/abs/1809.04281)

- Vaswani et al. – Attention is all you need (https://arxiv.org/abs/1706.03762)

- https://www.analyticsvidhya.com/blog/2020/01/how-to-perform-automatic-music-generation/

- https://medium.com/artists-and-machine-intelligence/neural-nets-for-generating-music-f46dffac21c0

- https://salu133445.github.io/musegan/model
